By Cdr Rahul Verma (r)

“The future of conflict will not be declared, it will be detected.”

On May 8, 2025, Indian drones struck key radar installations near Lahore. Pakistan responded with waves of its own drones. Neither country declared war. Welcome to the new normal, where conflict unfolds through autonomous systems but never quite crosses the threshold into declared warfare.

Since the early 2020s, the international security environment has trended away from decisive wars and toward continuous technological brinkmanship. Great powers and regional rivals alike are locked in what can be described as an algorithmic Cold War: a condition of persistent, low-visibility hostilities mediated increasingly by autonomous systems and AI. This is not an evolution of traditional warfighting; it is a strategic rupture. We have entered the era of "No War No Peace", in which states engage in hostile actions such as surveillance, sabotage, and denial operations without breaching the formal threshold of war. The doctrine of escalation dominance has been replaced by one of perpetual ambiguity.

This paradigm shift is mirrored in India's evolving defence doctrine, particularly in the rollout of the Integrated Unmanned Roadmap 2024–2034, which sets out a strategic vision for deploying autonomous systems across all three services. Developed in alignment with indigenous innovation efforts, the roadmap emphasizes persistent surveillance, AI-enabled strike coordination, and swarm-based deterrence. Organisations such as DRDO and ISRO, together with start-ups and the wider private sector, are at the forefront of this transition, with joint ventures focusing on real-time ISR fusion, sovereign AI algorithms, and next-generation drone ecosystems capable of operating in contested and communications-denied environments. This institutional backing reinforces India's commitment to preparing for a security architecture in which the threshold for formal conflict is algorithmically managed, not politically declared.

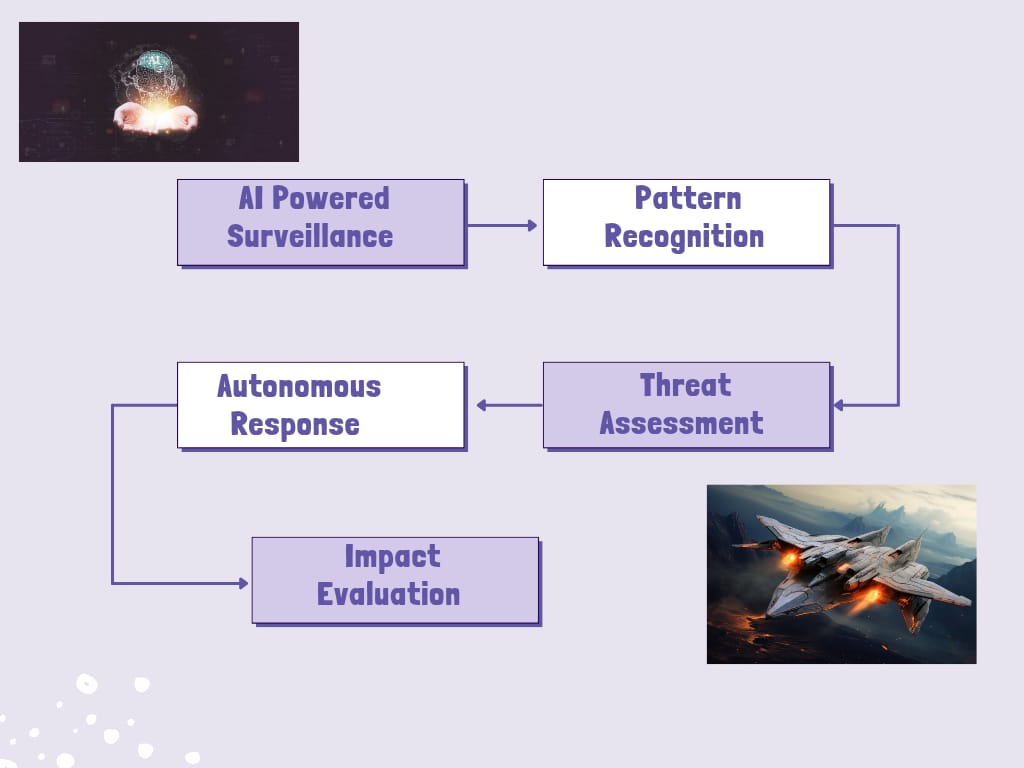

AI and unmanned systems are the backbone of this paradigm. They enable what political scientist Thomas Rid called "war without war": constant engagement without formal conflict. But unlike Cold War-era deterrence, which was human-calibrated, this new framework is machine-paced and data-defined. Drones act not only as tools of kinetic engagement but also as sovereign sensors and psychological weapons, asserting presence and undermining adversary confidence. AI algorithms do not merely support decision-making; they increasingly replace it, especially in contested environments where human latency is a liability.

Strategic planners must now ask: what does deterrence look like when it is driven by pattern recognition rather than political signalling? How do we manage escalation when autonomous systems, trained on different datasets and doctrines, interpret the same input in radically different ways? This article examines how AI and drones are shaping the "No War No Peace" environment, drawing not on speculative future scenarios but on observable technological patterns, doctrinal shifts, and operational design. The goal is to illuminate the strategic logic, and the inherent risks, of delegating national security to code and circuits in an era where the silence between strikes may be the only peace we have left.

The Architecture of Perpetual Confrontation

The current landscape is defined not by kinetic exchange but by a persistent “operational hum” of unmanned surveillance, cyber probing, electronic jamming, and AI-based signal interpretation. This is the infrastructure of “No War No Peace.”

- Strategic Persistence. Unlike traditional deterrence, which relies on episodic force posturing, today’s unmanned systems offer continuous presence without provocation. High-altitude long-endurance (HALE) UAVs and low-observable micro-drones provide an uninterrupted ISR envelope, redefining sovereignty as a function of sensor reach.

- Plausible Deniability at Scale. AI enables offensive and defensive operations that blur legal and ethical thresholds. Attribution becomes technically challenging and politically ambiguous. A drone strike on a radar station, a swarm disabling a maritime domain awareness network, or spoofed AI traffic mimicking troop movements: each is an act of aggression designed to escape declaration.

- Grey-Zone Doctrine, Codified. What used to be tactical improvisation is now strategic design. Major military planners are building AI into doctrine itself, training algorithms to operate under mission-type orders and to decide without human input in communications-degraded environments. Strategic ambiguity is no longer a byproduct; it is a feature.

In the India-Pakistan context, the "operational hum" of unmanned systems is no longer theoretical. Following the Pahalgam terror attack in April 2025, India used high-altitude drones and AI-enabled surveillance platforms to maintain persistent intelligence, surveillance, and reconnaissance (ISR) over the Line of Control (LoC) and key Pakistani positions. These systems, supported by real-time satellite imagery from ISRO and DRDO assets, enabled Indian forces to identify, track, and neutralize cross-border threats with unprecedented speed and accuracy. Pakistan, in turn, deployed modified commercial drones for arms delivery and tactical reconnaissance, challenging India's airspace security. The resulting technological arms race has seen both sides invest heavily in counter-drone systems; India's anti-drone systems intercepted over 80% of hostile UAVs during the crisis. This contest further entrenches the No War No Peace paradigm along one of the world's most volatile borders.

The Intelligence-Strike Feedback Loop

The traditional ISR-to-shooter chain relied on human cognition: data was filtered, discussed, and judged. Now, with AI-based decision engines, that loop is tightening into a near-seamless feedback cycle. This has transformed conflict in three ways:

- Predictive Targeting. Machine learning models fed by multimodal data (SIGINT, OSINT, GEOINT) are generating pattern-of-life analyses that predict adversary behaviour. This shifts targeting from reactive to anticipatory, a move from "find and fix" to "forecast and pre-empt."

- Distributed Lethality. Swarm-capable drones, linked via secure mesh networks, now operate as autonomous strike clusters. They are capable of local engagement decisions based on sensor inputs, enabling kinetic action in seconds rather than minutes or hours.

- Risk of Compression Errors. The compression of decision time has consequences. AI systems can misclassify intent, reading a reconnaissance drone as a munition or a jamming signal as a strike prelude. With humans removed from key decision points, the margin for political damage control has narrowed dramatically.

These encounters frequently play out in the so-called "grey zone": drone incursions, electronic attacks, and sabotage missions that stop short of open war but sustain a constant state of tension and attrition. The speed and ambiguity of these engagements, in which autonomous systems can identify, target, and strike within minutes, often with minimal human oversight, show that the intelligence-strike feedback loop is now measured in minutes or seconds rather than hours. For India, which is watching these developments closely, the lesson is clear: integrating AI and unmanned systems into border security and crisis management is not just a technological upgrade but a fundamental shift in the tempo and character of conflict.

Escalation Dynamics in an Autonomous Battlespace

Historically, escalation was shaped by humans, through doctrine, dialogue, and deterrent signalling. Autonomous systems short-circuit these buffers.

- Loss of Interpretive Common Ground. Two adversarial AI systems interpreting sensor data differently can reach contradictory conclusions about the same event. Without a shared language for “intent,” strategic stability becomes a matter of AI architecture and training data, not diplomacy.

- Adversarial AI and Escalation Traps. Deep learning systems are vulnerable to adversarial manipulation: inputs designed to deceive or distort perception. This presents a new category of threat, AI-triggered escalation by design, in which one side feeds misleading data to provoke an automated response from the other.

- Black-Box Escalation. Advanced AI models often operate as black boxes, offering no explanation for how they reached a decision. This obscurity undermines post-incident analysis and complicates strategic communication, increasing the risk that misjudgements spiral out of control.
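To make the first two risks more concrete, here is a deliberately toy sketch, not a model of any fielded system. It assumes two hypothetical linear (logistic) classifiers, `MODEL_A` and `MODEL_B`, whose invented weights stand in for "different datasets and doctrines"; the feature names, weights, and 0.5 threshold are illustrative assumptions only. The same track is labelled differently by each model, and a small FGSM-style nudge, which assumes the adversary knows model B's weights, is enough to push model B across its threshold.

```python
import math

# Hypothetical, normalised sensor features for one track (values invented for illustration).
FEATURES = {"speed": 0.6, "altitude": 0.3, "emitter_activity": 0.5}

# Two hypothetical models "trained" on different data, so their weights differ.
MODEL_A = {"speed": 2.0, "altitude": -1.0, "emitter_activity": 1.5, "bias": -1.2}
MODEL_B = {"speed": 1.0, "altitude": -0.5, "emitter_activity": 0.5, "bias": -1.0}


def threat_probability(model, features):
    """Logistic score: sigmoid of the weighted feature sum plus the bias term."""
    z = model["bias"] + sum(model[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def classify(model, features, threshold=0.5):
    """Label a track as hostile when its score reaches the (assumed) threshold."""
    return "hostile" if threat_probability(model, features) >= threshold else "non-hostile"


def adversarial_nudge(model, features, epsilon):
    """FGSM-style step for a linear model: the gradient of the score with respect
    to each input has the sign of that input's weight, so shifting every feature
    by epsilon in that direction raises the model's threat score."""
    return {
        name: value + epsilon * math.copysign(1.0, model[name])
        for name, value in features.items()
    }


if __name__ == "__main__":
    for label, model in (("A", MODEL_A), ("B", MODEL_B)):
        p = threat_probability(model, FEATURES)
        print(f"Model {label}: p={p:.2f} -> {classify(model, FEATURES)}")
    # Model A: p=0.61 -> hostile
    # Model B: p=0.43 -> non-hostile

    spoofed = adversarial_nudge(MODEL_B, FEATURES, epsilon=0.2)
    print("Model B on spoofed input:", classify(MODEL_B, spoofed))
    # Model B on spoofed input: hostile
```

Linear models are used on purpose: their input gradient is simply the weight vector, so the "attack" reduces to adding epsilon times the sign of each weight. Real deep learning systems are far less transparent, which is exactly the black-box problem described above.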

Strategic Outlook: Stability Without Peace

We are entering a security environment defined not by the balance of power, but by the balance of perception and prediction. In this world, peace is not a product of treaties or diplomacy. It is the byproduct of systems watching each other, restraining action through mutual algorithmic vigilance. This is stability without peace: a fragile, machine-mediated equilibrium in which constant low-grade confrontation replaces both the clarity of war and the resolution of peace.

(a) Tactical Encounters, Strategic Drift. The proliferation of unmanned systems has fractured the distinction between tactical operations and strategic consequences. A drone crossing a border was once a surveillance event; now it risks being interpreted as aggression. An AI model recalibrating threat thresholds in real time might escalate posture based on inference, not intent. In this paradigm, strategic drift is the norm. Escalation is no longer a ladder but a gradient, shaped by AI confidence intervals, real-time telemetry, and network latency, as the ongoing Israel–Gaza conflict has shown.

(b) The Illusion of Control. AI-based systems promise precision, efficiency, and control. But the more we delegate judgment to machines, the more we outsource risk rather than reduce it. Strategic actors may assume they can pause or override autonomous systems at will. In reality, latency, degraded comms, and decision compression mean the window for human intervention may close before humans even realize a decision has been made. This creates what algorithmic warfare theorists have called the "illusion of reversibility": a false sense that escalation can be dialled back when, in fact, the system has already moved on.

(c) Model Wars, Not Proxy Wars. Future conflicts will be fought not just by proxies on the ground but by competing AI models in digital space. This shift is already acknowledged in India's strategic ecosystem. The AI Task Force on Defence, constituted by the Ministry of Defence, has emphasized the transformational role of artificial intelligence across ISR, autonomous platforms, and cognitive electronic warfare. These developments mark a redefinition of strategic capability. The next arms race is not for warheads but for weights, biases, and datasets. As India invests in secure, sovereign AI through initiatives such as iDEX and the Defence AI Council (DAIC), it underscores a doctrine in which algorithmic dominance, not just kinetic power, determines deterrence and decision superiority. The most critical battleground will be cognitive: whose system sees more, decides faster, classifies more accurately, and adapts in near real time? This leads to a paradigm of arms races without gunfire:

- Nations won’t just race to build more drones, they’ll race to train more robust models.

- Updates to algorithmic logic may trigger shifts in strategic balance, like missile deployments once did.

- Strategic surprise will no longer come from troop movements, but from software pushed to edge devices under new rules of engagement.

(d) Deterrence by Detection. Where nuclear deterrence was built on second-strike capability, unmanned deterrence is built on first-sight capability. If one side believes its drone mesh will detect and respond faster than the adversary, it may feel emboldened to act, confident in tactical containment. But this also increases the incentive for blinding the enemy first through jamming, spoofing, or AI-targeted deception, potentially triggering escalation as a defensive-first reflex.

This is the paradox of stability without peace. Each side believes it can deter the other by seeing more, reacting faster, and automating harder, yet this very logic ensures continuous confrontation, because no side can afford to fall behind.

Conclusion: Control, Not Victory, Is the New Endgame

"Once machines are given the power to make life-and-death decisions, we lose the ability to hold anyone accountable." I quote Paul Scharre, author of "Army of None", to emphasize the point. The challenge before us is not preventing war; it is preventing the drift into war without decision, a slow slide driven not by intent but by automation. In the new "No War No Peace", we are not facing battlefields; we are navigating ecosystems of persistent machine interaction: surveillance drones over contested zones, AI inference engines classifying enemy signals, swarms loitering in silence, awaiting programmed triggers. Victory is no longer a discrete event. It is being redefined as algorithmic dominance, as control over time, tempo, and perception. But this pursuit of speed and foresight comes at a cost: the erosion of human judgment from the chain of escalation.

We are not just building tools; we are coding judgment into machines. That means the burden of ethical clarity, legal accountability, and strategic constraint is heavier than ever. Delegation without oversight is not progress; it is abdication. So the challenge for today's defence leaders is not how to stop autonomy but how to command it, not just technically, but politically and morally.

That requires investment in:

- Transparent and auditable AI architectures to ensure predictability and compliance with the laws of armed conflict

- Interoperable frameworks that reduce the risk of miscalculation between adversarial systems operating in the same space

- Human–machine teaming doctrines that retain moral agency where it matters most: at the point of decision

We may face a future where peace is not the absence of war but the silence between unmanned strikes. Done right, full autonomy won’t diminish the role of the war fighter. It will elevate it by removing the fog from data, the delay from action, and the error from fatigue, while preserving strategic judgment at the core of command.

Cdr Rahul Verma (r), former Cdr (TDAC) in the Indian Navy, has 21 years of experience as a naval aviator flying diverse aircraft. He has served as a Sea King pilot and RPAS flying instructor, among other roles, and his core competencies span Product and Innovation Management, Aerospace Law, UAS, and Flight Safety. The author is an Emerging Technology and Prioritization Scout for a leading Indian multinational corporation, focusing on advancing force modernization through innovative technological applications and operational concepts. Holding an MBA and professional certificates from institutions such as Olin Business School, NALSAR, Axelos, and IIFT, he is passionate about contributing to aviation, unmanned technology, and policy discussions. Through writing for various platforms, he aims to leverage his domain knowledge to advance unmanned and autonomous systems and create value for Aatmanirbhar Bharat and the Indian aviation industry.